My question

In Device Manager, as well as in Advanced display settings, Windows tells me that my external monitor is connected to/driven by the less powerful of the two graphics cards in my laptop (the Intel UHD Graphics 620, my integrated GPU), but I want to switch it over to the other one: an Nvidia GeForce MX150.

I've found plenty of guides online on how to make specific programs render on the Nvidia GPU (using the Nvidia Control Panel), but I can't find out how to change which graphics card drives the monitor itself. As I understand it, if I change which GPU runs a specific program, that GPU computes what should appear in the program's window, but the result still has to pass through the GPU that actually drives the screen.

Below are some screenshots:

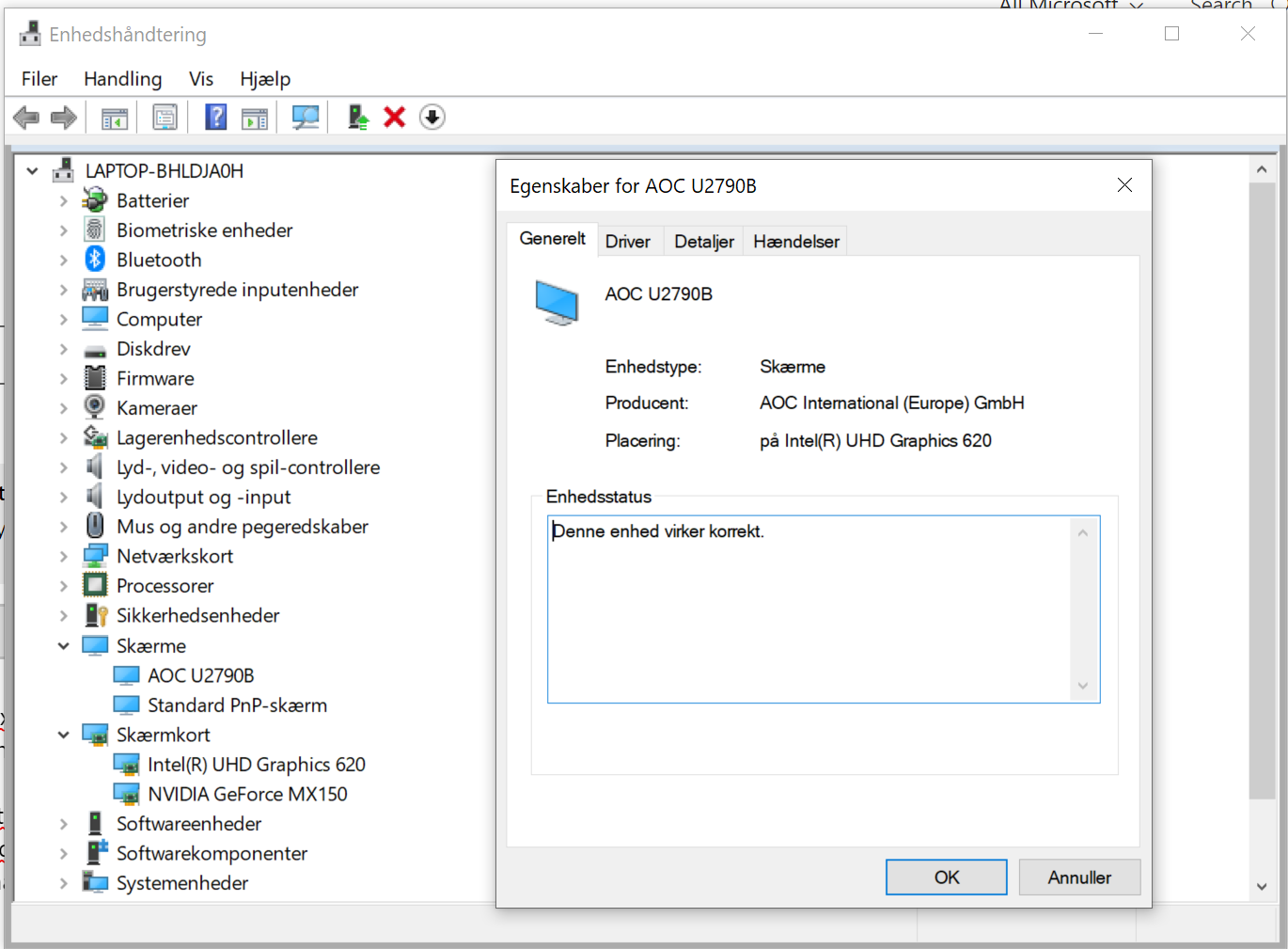

Device Manager --> Displays --> right-click AOC U2790B (my external monitor) --> Properties. Where it says "Placering" (Danish for Location), I want it to say Nvidia GeForce MX150.

dxdiag for the external monitor:

Display settings --> Advanced display settings --> Display adapter properties

Background/what I really want to accomplish

I recently bought a new AOC U2790PQU monitor, which according to the spec sheet is a 4K, 60 Hz, 10-bit display.

Windows, however, reports it as only 8-bit, and I can't raise the refresh rate to 60 Hz without lowering the resolution.

After some research, I suspect that the Intel UHD Graphics 620 (the integrated graphics card in my laptop) is the bottleneck, i.e. it isn't able to drive the display at its full capabilities.
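To put rough numbers on "isn't fast enough", here is a back-of-the-envelope sketch of the raw pixel data rate the monitor needs at its native resolution. It assumes RGB output with three channels per pixel and deliberately ignores blanking intervals and link encoding overhead, so the real requirement on the cable and port is somewhat higher. `raw_video_bitrate` is a hypothetical helper written only for this illustration.

```python
def raw_video_bitrate(width: int, height: int, refresh_hz: int,
                      bits_per_channel: int, channels: int = 3) -> int:
    """Active-pixel data rate in bits per second.

    Assumption: RGB (3 channels), no blanking intervals, no link encoding
    overhead, so this is a lower bound on what the connection must carry.
    """
    return width * height * refresh_hz * bits_per_channel * channels


if __name__ == "__main__":
    # Compare the 30 Hz fallback with the desired 60 Hz, at 8 and 10 bits per channel.
    for hz in (30, 60):
        for bpc in (8, 10):
            bps = raw_video_bitrate(3840, 2160, hz, bpc)
            print(f"3840x2160 @ {hz} Hz, {bpc}-bit: {bps / 1e9:.2f} Gbit/s")
```

Doubling the refresh rate doubles the data rate, and going from 8 to 10 bits per channel adds another 25%, which is why a link that just manages 4K at 30 Hz may have to drop resolution or bit depth to reach 60 Hz. Whether that limit comes from the GPU, the port, or the cable would need to be checked against the specs of the connection actually in use.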

What I really want, though, is simply to get 4K at 60 Hz with 10-bit color, so if you have another idea about what the problem might be, I'd be happy to test it.